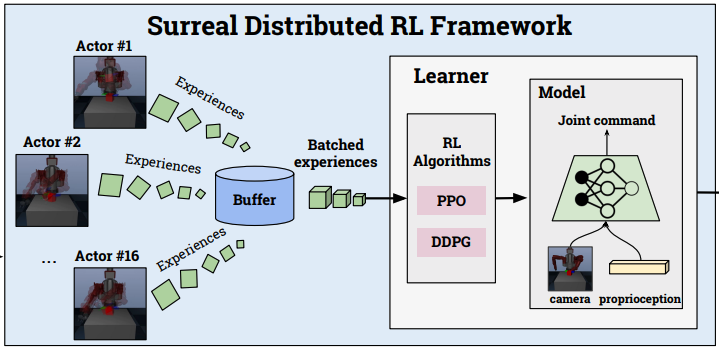

Reproducibility has been a significant challenge in deep reinforcement learning and robotics research. Open-source frameworks and standardized benchmarks can play an integral role in rigorous evaluation and reproducible research. We introduce SURREAL, an open-source, scalable framework that supports state-of-the-art distributed reinforcement learning algorithms. We design a principled distributed learning formulation that accommodates both on-policy and off-policy learning. We demonstrate that SURREAL algorithms outperform existing open-source implementations in both agent performance and learning efficiency. We also introduce the SURREAL Robotics Suite, an accessible set of benchmarking tasks in physical simulation for reproducible robot manipulation research. We provide extensive evaluations of SURREAL algorithms and establish strong baseline results.